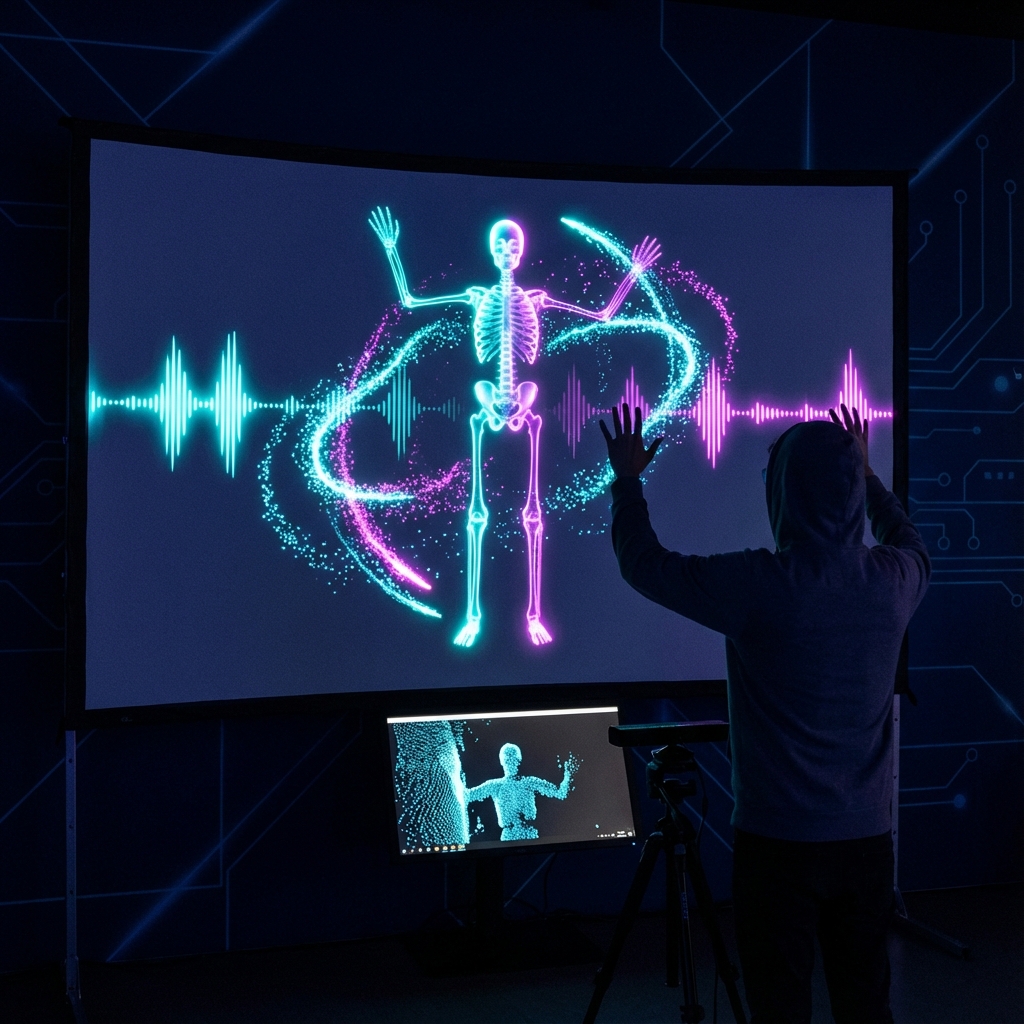

Kinect-to-Music Performance Engine

Custom TouchDesigner component library for live music performance using Azure Kinect body tracking. It processes 32 skeletal joints at 30 fps with predictive smoothing and maps gestures, arm angles, and movement velocity to MIDI/OSC outputs. A training mode handles calibration and a concert mode handles low-latency performance. Used in 5+ Visual Arena events.

⚡ Challenges

- Sub-frame latency for musical timing
- Gesture recognition reliability
- Performer-friendly calibration

✓ Outcomes

- Used in 5+ live performances
- Open-sourced component library
- Adopted by 3 other artists

📖 Full Details

This custom-built TouchDesigner component library turns Azure Kinect body tracking data into expressive performance parameters that control music and generative visuals. Built for live performance, it maps human movement to audio-visual output intuitively and with the low latency a performer needs for the system to feel responsive.

The pipeline starts with skeletal data from the Azure Kinect Body Tracking SDK, which delivers 32 joint positions per frame at 30 fps. The raw tracking data then passes through custom smoothing that suppresses jitter while preserving intentional movement: a predictive filter adapts its smoothing intensity to the current motion velocity.
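The library's exact smoothing algorithm is not published here, so the sketch below substitutes a well-known velocity-adaptive filter, the One Euro (1€) filter (Casiez et al., CHI 2012), plus a simple linear extrapolation step standing in for the "predictive" part. It illustrates the idea rather than reproducing the library's code; the class names, the `predict()` lead time, and all default parameters are assumptions.

```python
# Sketch: One Euro filter + linear prediction, an ASSUMED stand-in for the
# library's own smoother. Names and defaults are hypothetical.
import math
from typing import List, Sequence, Tuple

FRAME_DT = 1.0 / 30.0  # Azure Kinect body tracking runs at 30 fps

Vec3 = Tuple[float, float, float]


def _alpha(dt: float, cutoff_hz: float) -> float:
    """Smoothing factor of a first-order low-pass filter with the given cutoff."""
    tau = 1.0 / (2.0 * math.pi * cutoff_hz)
    return 1.0 / (1.0 + tau / dt)


class OneEuroFilter:
    """Velocity-adaptive low-pass filter for a single scalar channel."""

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.05, d_cutoff: float = 1.0):
        self.min_cutoff = min_cutoff  # Hz: cutoff (heaviest smoothing) when the joint is still
        self.beta = beta              # how quickly the cutoff rises with speed
        self.d_cutoff = d_cutoff      # Hz: smoothing applied to the velocity estimate
        self.x = None                 # last filtered position
        self.dx = 0.0                 # last filtered velocity (units per second)

    def filter(self, value: float, dt: float = FRAME_DT) -> float:
        if self.x is None:            # first sample: nothing to smooth against yet
            self.x = value
            return value
        raw_dx = (value - self.x) / dt
        self.dx += _alpha(dt, self.d_cutoff) * (raw_dx - self.dx)
        # Faster motion -> higher cutoff -> less smoothing and less lag.
        cutoff = self.min_cutoff + self.beta * abs(self.dx)
        self.x += _alpha(dt, cutoff) * (value - self.x)
        return self.x

    def predict(self, lead_s: float) -> float:
        """Linearly extrapolate lead_s seconds ahead to offset the filter's lag."""
        if self.x is None:
            raise ValueError("predict() called before any sample was filtered")
        return self.x + self.dx * lead_s


class SkeletonSmoother:
    """One filter per joint axis: 32 joints x 3 axes for an Azure Kinect body."""

    def __init__(self, num_joints: int = 32, **filter_args: float):
        self.filters = [[OneEuroFilter(**filter_args) for _ in range(3)]
                        for _ in range(num_joints)]

    def update(self, joints: Sequence[Vec3], dt: float = FRAME_DT) -> List[Vec3]:
        """joints: one (x, y, z) position per joint, in a fixed joint order."""
        return [tuple(f.filter(c, dt) for f, c in zip(axes, pos))
                for axes, pos in zip(self.filters, joints)]
```

Tuning follows the usual One Euro recipe: lower `min_cutoff` until a still pose stops jittering, then raise `beta` until fast gestures stop lagging. The design choice matters for musical timing because a fixed heavy smoother would delay exactly the fast, accented movements a performer uses for rhythmic hits.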

Mapping tools connect body parameters to output controls. Predefined gestures include arm raising, leaning, crouching, and hand proximity to body zones. Continuous parameters such as arm angles, movement velocity, and body expansion/contraction drive analog controls. Zone-based triggers fire when a hand enters a defined spatial region.
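Continuous parameters and zone triggers reduce to small geometric computations on joint positions. A minimal sketch, assuming joints arrive as (x, y, z) tuples in millimetres in a camera space whose +Y axis points down (as in the Azure Kinect depth-camera coordinate system). `arm_elevation_deg`, `body_expansion`, and `Zone` are hypothetical names, and the hysteresis margin on zones is an assumed addition that stops a hand resting on a zone edge from retriggering every frame.

```python
# Sketch of mapping primitives; all names are HYPOTHETICAL, not the library's API.
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def arm_elevation_deg(shoulder: Vec3, wrist: Vec3,
                      down: Vec3 = (0.0, 1.0, 0.0)) -> float:
    """Angle between the shoulder->wrist vector and straight down, in degrees.

    0 = arm hanging, 90 = arm horizontal, 180 = arm straight up.
    `down` must be a unit vector; (0, 1, 0) assumes a camera whose +Y points down.
    """
    v = [w - s for w, s in zip(wrist, shoulder)]
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        return 0.0
    cos_a = sum(a * b for a, b in zip(v, down)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def body_expansion(joints: Sequence[Vec3]) -> float:
    """Mean distance of the joints from their centroid; grows as the limbs spread."""
    n = len(joints)
    centroid = tuple(sum(j[i] for j in joints) / n for i in range(3))
    return sum(math.dist(j, centroid) for j in joints) / n


@dataclass
class Zone:
    """Axis-aligned box that reports 'enter'/'exit' events for a tracked hand."""
    lo: Vec3
    hi: Vec3
    margin: float = 30.0  # assumed hysteresis band, same units as the joints (mm)
    active: bool = False

    def _contains(self, p: Vec3, pad: float) -> bool:
        return all(lo_c - pad <= c <= hi_c + pad
                   for c, lo_c, hi_c in zip(p, self.lo, self.hi))

    def update(self, hand: Vec3) -> Optional[str]:
        # Enter only once well inside; exit only once clearly outside.
        if not self.active and self._contains(hand, -self.margin):
            self.active = True
            return "enter"
        if self.active and not self._contains(hand, self.margin):
            self.active = False
            return "exit"
        return None
```

Usage: call `zone.update(hand_position)` once per frame and send a note-on on `"enter"` and a note-off on `"exit"`; feed `arm_elevation_deg(...) / 180.0` into a continuous control.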

Outputs include MIDI for hardware synthesizers, OSC for software instruments and VJ software, and TouchDesigner's internal parameters for driving generative visuals directly. The modular architecture lets performers build custom mapping configurations without writing code.
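MIDI and OSC are both compact wire formats, and a sketch makes the mapping from a body parameter to an output message concrete. The functions below are hypothetical helpers, not TouchDesigner's built-in MIDI and OSC operators (which a production network would more likely use): one scales a normalized 0..1 parameter to a 3-byte MIDI Control Change message, the other encodes an OSC 1.0 message with float32 arguments. The OSC address, host, and port are placeholders.

```python
# Sketch of output encoding; helper names, address, host and port are HYPOTHETICAL.
import socket
import struct


def to_midi_cc(value: float, channel: int, controller: int) -> bytes:
    """Map a normalized 0..1 value to a 3-byte MIDI Control Change message.

    channel is the 0-15 wire value (shown as channels 1-16 in most software).
    """
    if not 0 <= channel <= 15 or not 0 <= controller <= 127:
        raise ValueError("channel must be 0-15 and controller 0-127")
    v = round(max(0.0, min(1.0, value)) * 127)  # clamp, then scale to 7 bits
    return bytes([0xB0 | channel, controller, v])


def _osc_pad(b: bytes) -> bytes:
    """OSC strings are NUL-terminated and padded to a multiple of 4 bytes."""
    b += b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Encode an OSC 1.0 message whose arguments are all big-endian float32."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", f) for f in floats)
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def send_osc(address: str, *floats: float, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one OSC message over UDP (placeholder host/port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *floats), (host, port))
```

For example, `to_midi_cc(1.0, 0, 1)` yields the bytes `B0 01 7F` (mod wheel fully up on channel 1), and `send_osc("/kinect/arm_left", 0.42)` would push the same kind of parameter to an OSC listener.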

Three performance modes serve different stages of a show: training mode records the performer's comfortable range of motion for calibration, concert mode applies the full mapping with latency-optimized processing, and demo mode adds visual feedback showing the tracked body and active zones.
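Training-mode calibration can be as simple as recording each parameter's observed range and normalizing against it later. A minimal sketch, assuming min/max calibration (the source does not say which statistic the library uses). `Mode` and `RangeCalibrator` are hypothetical names, and demo mode is treated like concert mode here because its visual feedback lives outside this logic.

```python
# Sketch of mode-aware range calibration; ASSUMED min/max approach, hypothetical names.
from enum import Enum
from typing import Dict


class Mode(Enum):
    TRAINING = "training"  # record the performer's comfortable range
    CONCERT = "concert"    # full mapping, ranges frozen
    DEMO = "demo"          # as concert here; visual feedback is drawn elsewhere


class RangeCalibrator:
    """Learns a min/max per parameter in training mode, then maps live values to 0..1."""

    def __init__(self) -> None:
        self.mode = Mode.TRAINING
        self.lo: Dict[str, float] = {}
        self.hi: Dict[str, float] = {}

    def observe(self, name: str, value: float) -> None:
        # Only training mode may widen the ranges; concert/demo leave them frozen.
        if self.mode is Mode.TRAINING:
            self.lo[name] = min(value, self.lo.get(name, value))
            self.hi[name] = max(value, self.hi.get(name, value))

    def normalize(self, name: str, value: float) -> float:
        lo, hi = self.lo.get(name), self.hi.get(name)
        if lo is None or hi is None or hi <= lo:
            return 0.0  # uncalibrated or zero-width range: emit a neutral value
        return max(0.0, min(1.0, (value - lo) / (hi - lo)))
```

Clamping means a gesture larger than anything seen in rehearsal saturates at 1.0 instead of overshooting the output range, which keeps downstream MIDI or OSC values valid during a show.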

The library has been used in multiple live performances at Visual Arena and other cultural events, enabling musicians without a technical background to bring motion control into their artistic practice.